Part of Mingei’s digitization process is the creation of virtual avatars that represent the craftsmen and the intangible elements of heritage crafts. Virtual avatars are the result of 3D reconstruction. In computer graphics and computer vision, 3D reconstruction refers to any procedure that captures the shape and appearance of real objects. In the context of Mingei, MIRALab Sarl works on the face and body reconstruction of real people, in order to create natural-looking 3D virtual characters that closely resemble them.

Why use virtual avatars?

In the context of digitized cultural heritage, virtual humans can help immerse users in the virtual environments where a craft is presented, making the craft procedures more engaging for them. Several studies highlight the advantages of virtual avatars, which can improve social presence, engagement and performance. In some studies, users preferred interacting with an agent capable of natural conversational behaviors (e.g. gesture, gaze, turn-taking) over an agent without these features. Moreover, an embodied agent with locomotion and gestures has been shown to positively affect users’ sense of engagement, social richness and social presence. Finally, with respect to engagement, participants have reported better recall of stories told by a robotic agent when the agent looked at them more during storytelling. The use of virtual avatars is thus a step towards the preservation and representation of heritage crafts.

The creation process

The creation of these virtual avatars is divided into the following steps:

1. 3D scanning and image acquisition

2. 3D points and texture reconstruction

3. Standard topology wrapping

4. Blendshapes generation

5. Body integration

6. Lip syncing setup

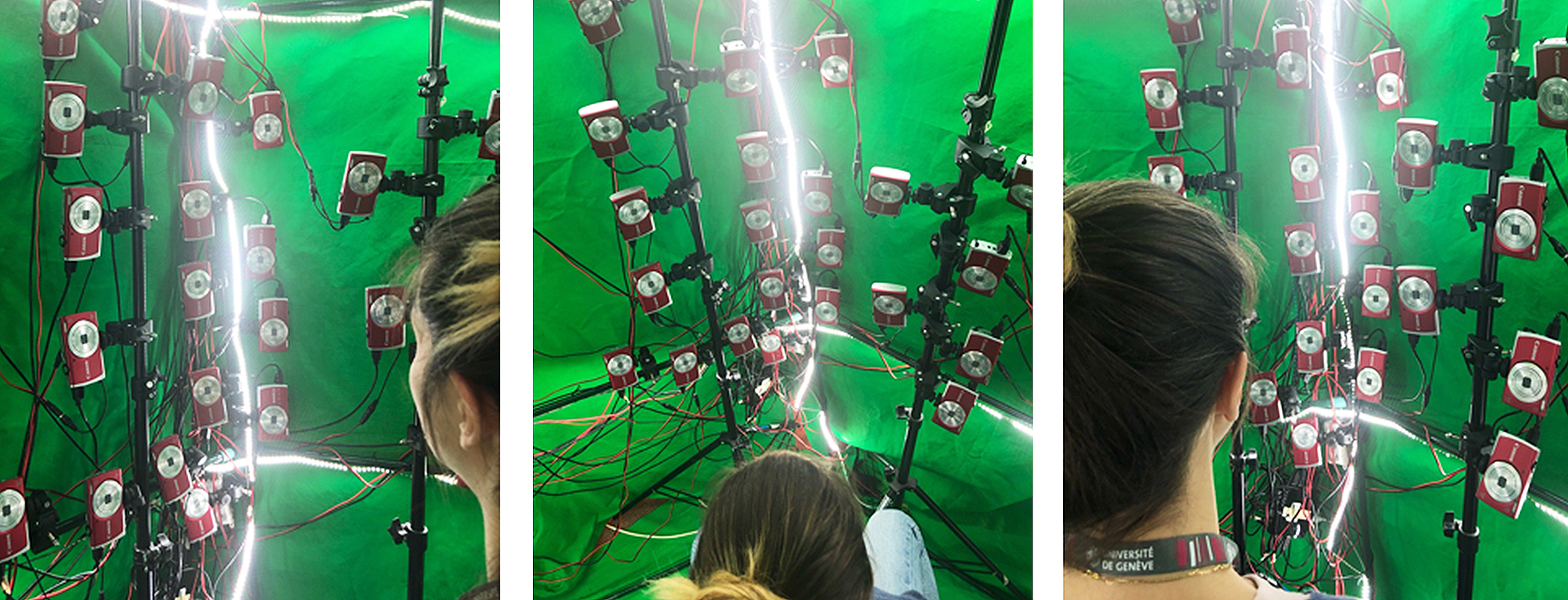

For the first step, an image-based full-body and 3D face scanner was used. We decided to test face reconstruction first, so we adjusted all the cameras to the position of the face, as shown in the pictures below.

Image acquisition provides the input data for the 3D reconstruction. Sixty 2D digital images were captured by 60 compact cameras, synchronized and controlled by a single computer. All photos were taken in a dedicated, enclosed area with a green background (as shown in Figure 1), which allowed us to edit the photos and improve their quality. For this processing stage, we used MATLAB to remove the green background. The resulting images, called masks, were then imported into Agisoft Metashape to improve the reconstruction accuracy. We photographed the face pronouncing each of the vowels (as shown in Figure 2), so that we obtained a separate representation for each vowel.
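The green-background removal can be illustrated with a simple chroma-key rule. The original processing was done in MATLAB; the sketch below is instead a hedged, dependency-free Python illustration of the general idea, assuming an image stored as rows of (r, g, b) tuples. The `dominance` threshold and the function names are hypothetical, not taken from the original pipeline, and real photos would need a threshold tuned to the actual lighting.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]


def green_mask(pixels: List[List[Pixel]], dominance: int = 40) -> List[List[bool]]:
    """Foreground mask for an RGB image stored as rows of (r, g, b) tuples.

    A pixel counts as green background when its green channel exceeds both
    red and blue by more than `dominance` (a hypothetical threshold that would
    need tuning). True = subject, False = background.
    """
    return [
        [not (g - max(r, b) > dominance) for (r, g, b) in row]
        for row in pixels
    ]


def apply_mask(pixels, mask, fill=(0, 0, 0)):
    """Replace background pixels with `fill`, keeping foreground pixels unchanged."""
    return [
        [px if keep else fill for px, keep in zip(row, mask_row)]
        for row, mask_row in zip(pixels, mask)
    ]


# Tiny 2x2 example: the left column is green screen, the right column is the subject.
image = [[(20, 200, 30), (180, 140, 120)], [(10, 250, 10), (90, 60, 50)]]
print(green_mask(image))  # [[False, True], [False, True]]
```

Metashape can use per-photo masks of this kind to ignore background pixels during reconstruction; how the masks are exported and loaded into Metashape is not shown here.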

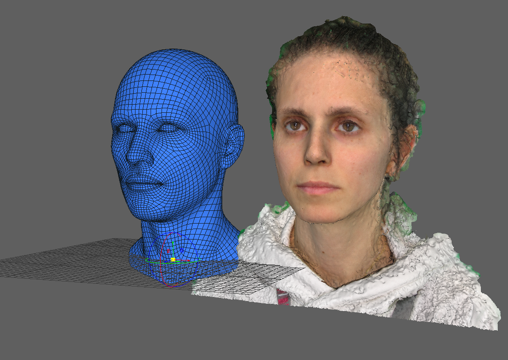

In the third step, we retargeted the head geometry and textures onto a standardized head, working on a predefined region of the head, namely its front part. To this end, we used the Wrap3D tool by Russian3DScanner to fit the topology of the reconstructed face to the standardized head (the blue one).

The fourth step gives the avatar the ability to “speak” and to show facial expressions. Its goal is the generation of blendshapes, which are necessary for lip synchronization. In general, blendshapes are a form of morphing: a way of deforming the geometry of a mesh to create a specific look or shape. For example, various facial expressions can be created on a virtual character’s face and then blended to obtain the desired result. Blendshapes are one of the most popular methods for generating facial animation.
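What “blending” means numerically can be written with the widely used delta blendshape model. This is a generic formulation, not necessarily the exact one used internally by Wrap3D, Unity or LipSync Pro, and the symbols are introduced here only for illustration: $\mathbf{b}_0$ holds the vertex positions of the neutral head, $\mathbf{b}_k$ those of target shape $k$ (for example one vowel), and $w_k$ is that target’s weight.

```latex
\mathbf{v}(\mathbf{w}) = \mathbf{b}_0 + \sum_{k=1}^{K} w_k \left( \mathbf{b}_k - \mathbf{b}_0 \right), \qquad 0 \le w_k \le 1
```

Setting a single $w_k = 1$ and all other weights to 0 reproduces target $k$ exactly, while intermediate or combined weights give in-between expressions. The subtraction $\mathbf{b}_k - \mathbf{b}_0$ is only meaningful when all shapes share the same vertex order, which is what a standardized head topology provides.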

For each expression reconstructed during the second and third steps, we used Optical Flow, a feature of the Wrap3D tool, to deform the standardized head to match the geometry of the corresponding vowel. The goal was to preserve the texture of the head mesh.
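Because every vowel head ends up in the same standardized topology, vertex i refers to the same facial point on every mesh, so per-vertex offsets from the neutral head can be stored once and mixed with weights. The following is a minimal pure-Python sketch of that idea, assuming meshes are plain lists of (x, y, z) tuples. The function names and the vowel labels are hypothetical, and the code does not reproduce Wrap3D’s Optical Flow deformation: it assumes the deformed vowel heads already exist.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def compute_deltas(neutral: List[Vec3], targets: Dict[str, List[Vec3]]) -> Dict[str, List[Vec3]]:
    """Per-vertex offsets of each target head from the neutral head.

    Only meaningful because every mesh shares the standardized topology,
    so index i denotes the same facial point on every head.
    """
    deltas: Dict[str, List[Vec3]] = {}
    for name, verts in targets.items():
        if len(verts) != len(neutral):
            raise ValueError(f"{name}: vertex count differs from the neutral head")
        deltas[name] = [
            (tx - nx, ty - ny, tz - nz)
            for (tx, ty, tz), (nx, ny, nz) in zip(verts, neutral)
        ]
    return deltas


def blend(neutral: List[Vec3], deltas: Dict[str, List[Vec3]], weights: Dict[str, float]) -> List[Vec3]:
    """Neutral positions plus the weighted sum of the selected deltas."""
    result = [list(v) for v in neutral]
    for name, w in weights.items():
        for i, (dx, dy, dz) in enumerate(deltas[name]):
            result[i][0] += w * dx
            result[i][1] += w * dy
            result[i][2] += w * dz
    return [tuple(v) for v in result]


# Toy two-vertex "heads" with hypothetical vowel targets "A" and "O".
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "A": [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)],
    "O": [(0.5, 0.0, 0.0), (1.0, 0.0, 0.5)],
}
deltas = compute_deltas(neutral, targets)
print(blend(neutral, deltas, {"A": 0.5, "O": 0.5}))  # [(0.25, -0.5, 0.0), (1.0, 0.0, 0.25)]
```

Storing offsets rather than full target meshes is what lets several expressions be active at once: each weighted offset is simply added on top of the neutral face.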

The final step of the face reconstruction was the lip-syncing procedure mentioned above. Lip syncing is the technique of matching a speaker’s lip movements to prerecorded speech audio that the listener hears. In our case, we used LipSync Pro, a Unity plugin. Before starting this process, we therefore exported all the previous data as blendshapes and integrated them into a Unity project.

We then analyzed the speech audio signal, extracted its phonemes, and placed each phoneme at its specific time in the audio, so that the lip movements are reproduced from the blendshapes in sync with the speech.
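The timing step can be pictured as turning a list of timed phonemes into blendshape weight curves. LipSync Pro performs this internally and its actual algorithm is not described here, so the sketch below is only an illustrative assumption: the phoneme-to-shape table, the linear fade-in/fade-out of `ramp` seconds, and all function names are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical mapping from phoneme labels to the vowel blendshapes built earlier.
# Phonemes without an entry (e.g. most consonants) leave the mouth neutral.
PHONEME_TO_SHAPE = {"a": "A", "e": "E", "i": "I", "o": "O", "u": "U"}

Timeline = List[Tuple[str, float, float]]  # (phoneme, start_s, end_s)


def envelope(t: float, start: float, end: float, ramp: float) -> float:
    """1.0 inside [start, end], linear fade-in over `ramp` s before it, fade-out after it."""
    if start <= t <= end:
        return 1.0
    if start - ramp < t < start:
        return (t - (start - ramp)) / ramp
    if end < t < end + ramp:
        return (end + ramp - t) / ramp
    return 0.0


def weights_at(t: float, timeline: Timeline, ramp: float = 0.05) -> Dict[str, float]:
    """Blendshape weights at time t; overlapping phonemes of one shape keep the max."""
    weights: Dict[str, float] = {}
    for phoneme, start, end in timeline:
        shape = PHONEME_TO_SHAPE.get(phoneme)
        if shape is None:
            continue
        w = envelope(t, start, end, ramp)
        if w > 0.0:
            weights[shape] = max(weights.get(shape, 0.0), w)
    return weights


def sample_track(timeline: Timeline, duration: float, fps: int = 30, ramp: float = 0.05):
    """Sample the weights at a fixed frame rate, as (time, weights) pairs."""
    frames = int(round(duration * fps)) + 1
    return [(i / fps, weights_at(i / fps, timeline, ramp)) for i in range(frames)]


timeline = [("h", 0.0, 0.1), ("a", 0.1, 0.3), ("o", 0.4, 0.6)]
print(weights_at(0.2, timeline))  # {'A': 1.0}
```

The short ramps keep the mouth from snapping between shapes; each sampled frame's weights could then drive the blendshapes from the previous step.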

Finally, once the head was ready, we attached it to a body, creating a full avatar, as can be seen in Figure 4.

With the avatar ready, we then tested on it some of the animations produced by the motion capture (MoCap) sessions carried out by Armines at the Haus der Seidenkultur in Krefeld. For this, the MoCap files had to be converted from BVH to FBX format, and the avatar had to be configured with a humanoid skeleton in Unity. Finally, the joints of the MoCap skeleton were matched to the corresponding joints of the avatar’s skeleton. The avatar can now move according to the motion capture of the craftsmen at Haus der Seidenkultur.
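The joint correspondence can be sketched as a name lookup from the MoCap skeleton to Unity’s Humanoid bones. In the actual workflow this mapping is configured on the imported FBX in the Unity Editor; the Python below only illustrates the idea by reading joint names from a BVH file’s HIERARCHY section. The left-hand names in `JOINT_MAP` are hypothetical examples (BVH joint naming depends on the capture system), while the right-hand side uses Unity Humanoid bone names such as `LeftUpperArm`.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical correspondence from MoCap joint names to Unity Humanoid bone names.
# The left-hand names would have to be adapted to the actual BVH files.
JOINT_MAP: Dict[str, str] = {
    "Hips": "Hips",
    "Spine": "Spine",
    "Spine1": "Chest",
    "Neck": "Neck",
    "Head": "Head",
    "LeftArm": "LeftUpperArm",
    "LeftForeArm": "LeftLowerArm",
    "LeftHand": "LeftHand",
    "RightArm": "RightUpperArm",
    "RightForeArm": "RightLowerArm",
    "RightHand": "RightHand",
}

JOINT_LINE = re.compile(r"^\s*(?:ROOT|JOINT)\s+(\S+)")


def bvh_joint_names(bvh_text: str) -> List[str]:
    """Names declared by ROOT/JOINT lines in the HIERARCHY section, in file order."""
    names: List[str] = []
    for line in bvh_text.splitlines():
        if line.strip() == "MOTION":
            break  # the motion data that follows holds only numbers
        match = JOINT_LINE.match(line)
        if match:
            names.append(match.group(1))
    return names


def map_joints(names: List[str], joint_map: Dict[str, str] = JOINT_MAP) -> Tuple[Dict[str, str], List[str]]:
    """Split MoCap joints into (mapped to an avatar bone, left unmapped)."""
    mapped = {n: joint_map[n] for n in names if n in joint_map}
    unmapped = [n for n in names if n not in joint_map]
    return mapped, unmapped
```

Listing the unmapped joints is a quick way to spot bones (for example extra spine or finger joints) that would otherwise be silently ignored when the animation is applied to the avatar.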